It is an interesting moment for artificial intelligence. For a long time, progress in AI followed a simple rule: make models bigger. More parameters, more data, more compute. This worked, but it also created serious problems. Training large models is expensive, slow, and consumes a huge amount of energy.

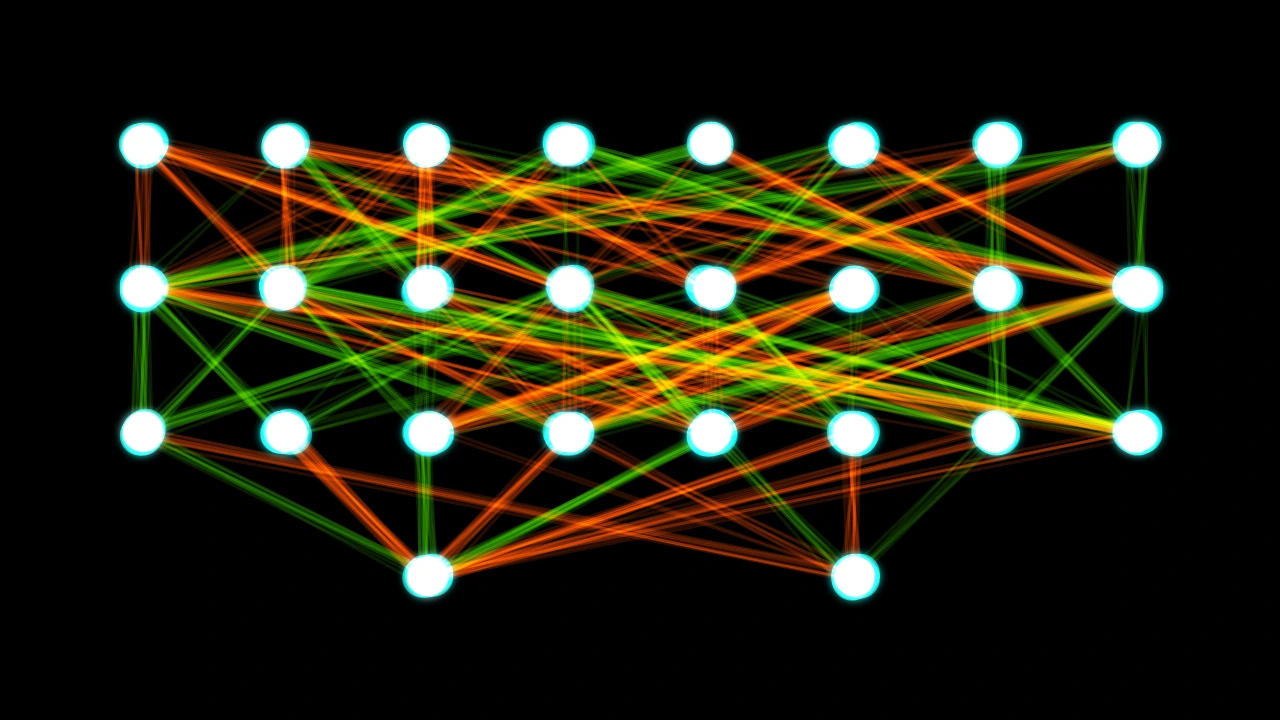

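Mixture of Experts, or MoE, offers a different approach. The idea itself is not new (it dates back to research from the early 1990s), but it has drawn renewed attention recently, including from Nvidia. Instead of activating the entire neural network for every input, an MoE model is split into many sub-networks, called experts, plus a small learned router that decides which of them are needed. For each piece of input (in a language model, each token), the router picks only a few experts, often just one or two, and the rest of the network stays idle. Over training, different experts tend to specialize in different kinds of input.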
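To make the routing step concrete, here is a minimal sketch of a sparse MoE layer with top-k routing in plain Python. It is a toy under stated assumptions, not any production implementation: the class name `ToyMoELayer`, the random weights, and the choice to make each expert a single linear map are all illustrative (real experts are usually small feed-forward networks). Mixing weights are computed with a softmax over the selected experts' scores only, which is one common variant.

```python
import math
import random


def softmax(xs):
    # Numerically stable softmax over a list of floats.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vec):
    # Multiply a matrix (list of rows) by a vector.
    return [sum(w * v for w, v in zip(row, vec)) for row in matrix]


class ToyMoELayer:
    """Hypothetical toy sparse Mixture-of-Experts layer with top-k routing.

    Assumption: each expert is one linear map; real MoE layers
    usually use small feed-forward networks as experts.
    """

    def __init__(self, dim, num_experts, top_k, seed=0):
        rng = random.Random(seed)
        self.top_k = top_k
        # Router: one row of weights per expert, giving one score per expert.
        self.router = [[rng.gauss(0.0, 1.0) for _ in range(dim)]
                       for _ in range(num_experts)]
        # Experts: each is a dim x dim weight matrix.
        self.experts = [[[rng.gauss(0.0, 1.0) / math.sqrt(dim) for _ in range(dim)]
                         for _ in range(dim)]
                        for _ in range(num_experts)]

    def forward(self, x):
        # 1. Score every expert for this input.
        logits = matvec(self.router, x)
        # 2. Keep only the top-k experts; the others are never evaluated.
        chosen = sorted(range(len(logits)), key=lambda i: logits[i],
                        reverse=True)[: self.top_k]
        # 3. Turn the chosen experts' scores into mixing weights that sum to 1.
        gates = softmax([logits[i] for i in chosen])
        # 4. Run only the chosen experts and blend their outputs.
        out = [0.0] * len(x)
        for gate, i in zip(gates, chosen):
            y = matvec(self.experts[i], x)
            out = [o + gate * yi for o, yi in zip(out, y)]
        return out, chosen


layer = ToyMoELayer(dim=4, num_experts=8, top_k=2)
output, used = layer.forward([1.0, 0.5, -0.5, 2.0])
print("experts used:", used)
```

Only two of the eight expert matrices are multiplied for this input; the other six cost nothing beyond the router's scoring.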

This makes the system more efficient. It is similar to how people work: you do not ask everyone you know for help with every problem, only the people best suited to the situation. With MoE, a model can hold far more parameters in total while each token uses only a fraction of them, so its capacity can grow without the cost of every computation growing at the same rate.
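The efficiency argument comes down to simple arithmetic: total parameters scale with the number of experts, while the parameters used per token scale only with how many experts are selected. The sketch below uses a deliberately simplified model and a purely hypothetical configuration (the function name and all numbers are made up, not taken from any real model); `shared_params` stands for parts every token passes through, such as attention and embeddings.

```python
def moe_param_counts(shared_params, params_per_expert, num_experts, top_k):
    """Return (total, active-per-token) parameter counts for a simplified MoE model.

    shared_params: parameters every token uses (e.g. attention, embeddings).
    params_per_expert: parameters in one expert, summed over all MoE layers.
    """
    total = shared_params + num_experts * params_per_expert
    active = shared_params + top_k * params_per_expert
    return total, active


# Hypothetical configuration, not any real model: 8 experts, 2 active per token.
total, active = moe_param_counts(
    shared_params=2_000_000_000,
    params_per_expert=5_000_000_000,
    num_experts=8,
    top_k=2,
)
print(f"total: {total / 1e9:.0f}B, active per token: {active / 1e9:.0f}B")
print(f"fraction of weights used per token: {active / total:.1%}")
```

With these made-up numbers the model stores 42B parameters, but each token touches only 12B of them (about 28.6%), so per-token compute stays much closer to that of a 12B dense model.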

Many researchers believe this direction is important for the long-term goal of Artificial General Intelligence, or AGI. A system that can handle many different kinds of problems needs more than raw size. It needs decision-making. It needs to know which capabilities to use and when.

This idea is not limited to research papers.

In fact, we have been working with a similar approach in Dvina. From the beginning, we assumed that no single language model or tool would be good at everything. Instead of relying on one model, we built an orchestrator layer that sits on top of multiple models, tools, and data sources.

This orchestrator works as a decision layer. When a user asks a question, it decides which model or resource should handle it. Sometimes it uses one. Sometimes it combines several and merges the results. The goal is not to expose that complexity, but to hide it behind a simple experience.
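A decision layer of this kind could be sketched, very loosely, as follows. This is not Dvina's implementation, which is not described here; everything below is hypothetical: the `Backend` type, the keyword-based scoring functions, the `threshold` and `max_backends` parameters, and the merge step, which simply labels and concatenates answers where a real system might ask another model to synthesize them.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Backend:
    """A model, tool, or data source the orchestrator can call (hypothetical)."""
    name: str
    score: Callable[[str], float]  # how well-suited this backend is for a query, 0..1
    run: Callable[[str], str]      # produces this backend's answer


def orchestrate(query: str, backends: List[Backend],
                threshold: float = 0.5, max_backends: int = 2) -> Tuple[str, List[str]]:
    # Score each backend once, then rank from most to least suitable.
    ranked = sorted(((b.score(query), b) for b in backends),
                    key=lambda pair: pair[0], reverse=True)
    # Use every backend that clears the threshold, up to max_backends.
    selected = [b for s, b in ranked if s >= threshold][:max_backends]
    if not selected:
        # Nothing is a clear fit: fall back to the single best-scoring backend.
        selected = [ranked[0][1]]
    results = [(b.name, b.run(query)) for b in selected]
    if len(results) == 1:
        return results[0][1], [results[0][0]]
    # Several backends answered: merge by labeling and concatenating their outputs.
    merged = "\n".join(f"[{name}] {text}" for name, text in results)
    return merged, [name for name, _ in results]


# Hypothetical backends with toy keyword-based scores.
backends = [
    Backend("code_model", lambda q: 0.9 if "python" in q.lower() else 0.1,
            lambda q: "Here is some code..."),
    Backend("web_search", lambda q: 0.8 if "latest" in q.lower() else 0.2,
            lambda q: "Recent search results..."),
    Backend("general_llm", lambda q: 0.4, lambda q: "A general answer..."),
]

answer, used = orchestrate("What is the latest Python release?", backends)
print(used)  # ['code_model', 'web_search']
```

A question about Python alone would go only to `code_model`, while a question none of the specialists fits falls back to `general_llm`. The structure mirrors MoE routing: score the candidates, use only the best ones, combine their outputs.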

The result is a system that feels adaptive. You ask a question, and the system figures out how to answer it in the most efficient way. This is close in spirit to what MoE does inside a single model, but applied at the system level: whole requests are routed across separate models and tools, rather than tokens across experts within one network.

AGI has not arrived yet. But ideas like MoE show that the path forward is not just about scale. It is about structure, coordination, and smarter use of resources.

If you are curious what this kind of approach feels like in practice, you can try Dvina for free at https://dvina.ai. It is not AGI, but it gives a small glimpse into how future AI systems may think and operate.